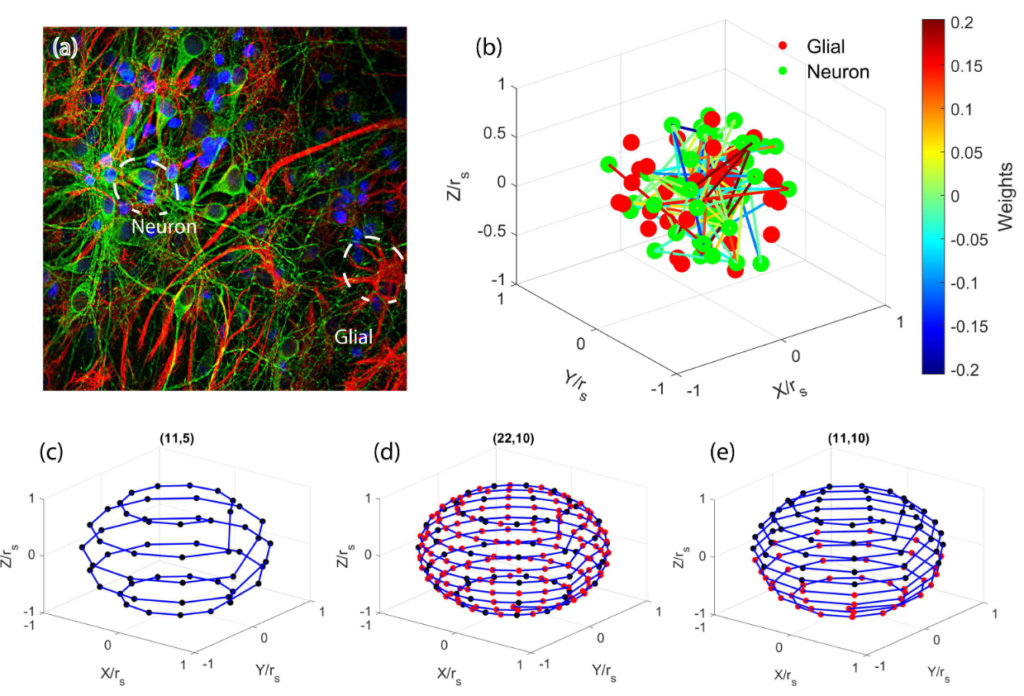

Training large neural networks on big datasets requires significant computational resources and time. Transfer learning reduces training time by pre-training a base model on one dataset and transferring its knowledge to a new model for another dataset. However, current transfer learning algorithms are limited: the transferred models must keep the dimensions of the base model, so their neural architecture cannot easily be modified to handle other datasets. Biological neural networks (BNNs), on the other hand, are adept at rearranging themselves to tackle completely different problems through transfer learning. Drawing on this ability of BNNs, we design a dynamic neural network that can be transferred to any other network architecture and can accommodate many datasets. Our approach uses raytracing to connect neurons in a three-dimensional space, allowing the network to grow into any shape or size. On the Alcala dataset, our transfer learning algorithm trains the fastest across changing environments and input sizes. In addition, we show that our algorithm outperforms the state of the art on an EEG dataset. In the future, this network may be considered for implementation on real biological neural networks to decrease power consumption.
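The idea of using raytracing to wire neurons placed in 3D space can be illustrated with a small, self-contained sketch. This is not RayBNN's implementation; it assumes that each neuron casts a few random rays, and that a ray creates a directed connection to the nearest neuron lying within a hypothetical `radius` of the ray and within a hypothetical `max_length` ahead of it. All function and parameter names here (`ray_hit_distance`, `first_hit`, `raytrace_connections`, `sample_in_sphere`, `rays_per_neuron`, `radius`, `max_length`) are illustrative assumptions.

```python
import math
import random
from typing import List, Optional, Set, Tuple

Vec = Tuple[float, float, float]


def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def random_unit_vector(rng: random.Random) -> Vec:
    # A normalized isotropic Gaussian sample is uniform on the unit sphere.
    while True:
        v = (rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0))
        n = math.sqrt(dot(v, v))
        if n > 1e-12:
            return (v[0] / n, v[1] / n, v[2] / n)


def sample_in_sphere(n: int, sphere_radius: float, rng: random.Random) -> List[Vec]:
    # Rejection-sample n neuron positions uniformly inside a sphere.
    points: List[Vec] = []
    while len(points) < n:
        p = tuple(rng.uniform(-sphere_radius, sphere_radius) for _ in range(3))
        if dot(p, p) <= sphere_radius ** 2:
            points.append(p)
    return points


def ray_hit_distance(origin: Vec, direction: Vec, target: Vec,
                     radius: float, max_length: float) -> Optional[float]:
    # Distance along a unit-length ray at which it passes `target`, provided the
    # target lies ahead of the origin, within `max_length`, and within `radius`
    # of the ray's line; otherwise None (hypothetical hit rule).
    d = sub(target, origin)
    t = dot(d, direction)
    if t <= 0.0 or t > max_length:
        return None
    perp_sq = dot(d, d) - t * t
    return t if perp_sq <= radius * radius else None


def first_hit(i: int, direction: Vec, positions: List[Vec],
              radius: float, max_length: float) -> Optional[int]:
    # Index of the nearest other neuron intersected by a ray cast from neuron i.
    best, best_t = None, math.inf
    for j, target in enumerate(positions):
        if j == i:
            continue
        t = ray_hit_distance(positions[i], direction, target, radius, max_length)
        if t is not None and t < best_t:
            best, best_t = j, t
    return best


def raytrace_connections(positions: List[Vec], rays_per_neuron: int,
                         radius: float, max_length: float,
                         seed: int = 0) -> Set[Tuple[int, int]]:
    # Cast random rays from every neuron; each ray that hits a neuron adds a
    # directed edge (source, target) to a sparse connectivity set.
    rng = random.Random(seed)
    edges: Set[Tuple[int, int]] = set()
    for i in range(len(positions)):
        for _ in range(rays_per_neuron):
            j = first_hit(i, random_unit_vector(rng), positions, radius, max_length)
            if j is not None:
                edges.add((i, j))
    return edges


if __name__ == "__main__":
    rng = random.Random(42)
    neurons = sample_in_sphere(50, sphere_radius=5.0, rng=rng)
    edges = raytrace_connections(neurons, rays_per_neuron=8, radius=1.0, max_length=10.0)
    print(len(neurons), "neurons,", len(edges), "connections")

    # "Growing" the network: add neurons in a larger sphere, cast rays again,
    # and keep the existing edges (an assumption about how growth is handled).
    neurons += sample_in_sphere(20, sphere_radius=7.0, rng=rng)
    edges |= raytrace_connections(neurons, rays_per_neuron=8, radius=1.0,
                                  max_length=10.0, seed=1)
    print(len(neurons), "neurons,", len(edges), "connections")
```

Because the connections depend only on neuron positions and rays, the network is not tied to fixed layer dimensions: adding neurons anywhere in the volume and casting more rays enlarges the graph while the old edges (and, presumably, their trained weights) can be kept. The brute-force nearest-hit search is O(n²) per ray batch and is meant only for clarity; a practical implementation would likely use a spatial index.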

Source Code For RayBNN v1.0.0

https://zenodo.org/records/10846144

Source Code For RayBNN v2.0.1

- https://github.com/BrosnanYuen/RayBNN_Neural
- https://github.com/BrosnanYuen/RayBNN_DataLoader
- https://github.com/BrosnanYuen/RayBNN_Sparse
- https://github.com/BrosnanYuen/RayBNN_Raytrace
- https://github.com/BrosnanYuen/RayBNN_Python
- https://github.com/BrosnanYuen/RayBNN_Cell
- https://github.com/BrosnanYuen/RayBNN_Optimizer